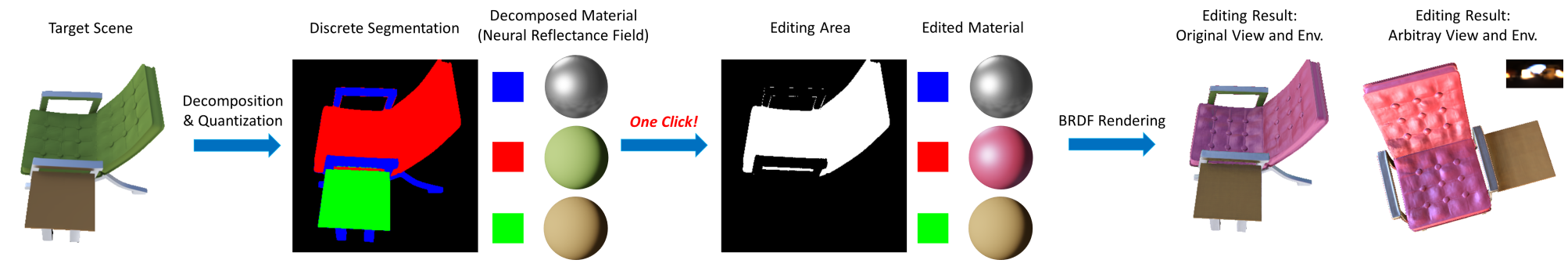

We propose VQ-NeRF, which incorporates the Vector Quantization (VQ) mechanism to discretize reflectance decomposition. This enables efficient and view-consistent material selection and editing.

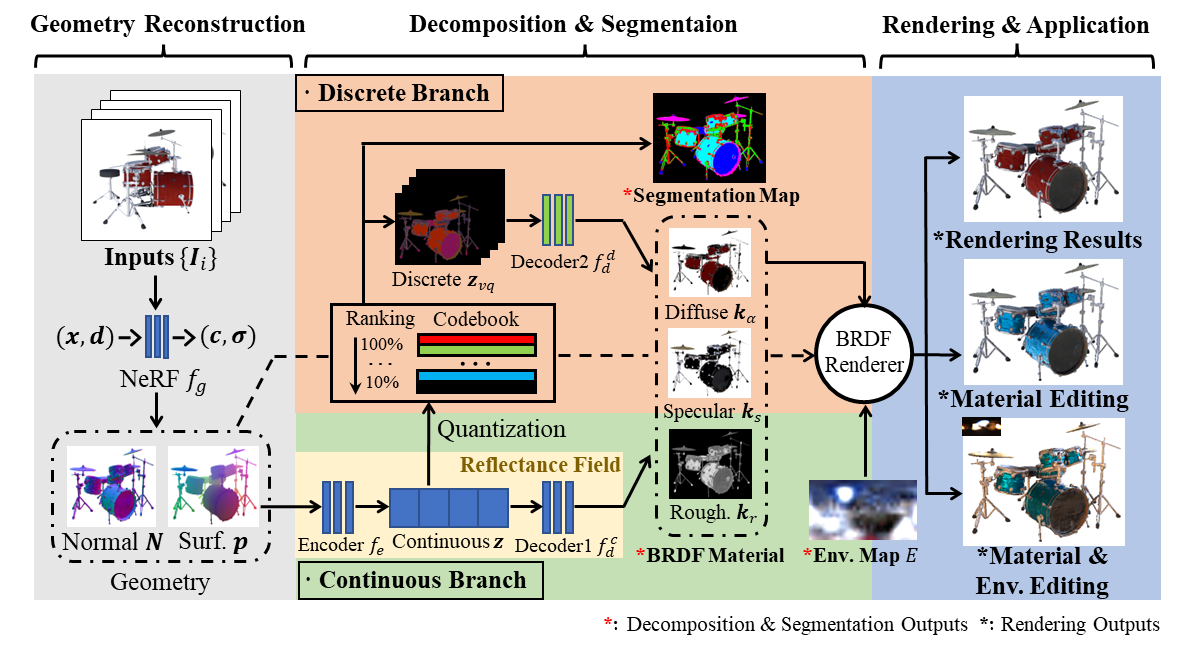

We propose VQ-NeRF, a two-branch neural network model that incorporates Vector Quantization (VQ) to decompose and edit reflectance fields in 3D scenes. Conventional neural reflectance fields model 3D scenes with continuous representations only, even though real objects are typically composed of discrete materials. This lack of discretization can lead to noisy material decomposition and makes material editing complicated. To address these limitations, our model consists of a continuous branch and a discrete branch. The continuous branch follows the conventional pipeline to predict decomposed materials, while the discrete branch uses the VQ mechanism to quantize the continuous materials into discrete ones. Discretizing the materials reduces noise in the decomposition and yields a segmentation map of discrete materials. A specific material can then be selected for editing by clicking on the corresponding area of the segmentation map. Additionally, we propose a dropout-based VQ codeword ranking strategy to predict the number of materials in a scene, which reduces redundancy in the material segmentation. To improve usability, we also develop an interactive interface that further assists material editing. We evaluate our model on both computer-generated and real-world scenes, demonstrating its superior performance. To the best of our knowledge, our model is the first to enable discrete material editing in 3D scenes.

Overview of our VQ-NeRF. We first take multi-view posed images as inputs and use a NeRF model (gray part) to reconstruct the scene geometry. Next, we apply a two-branch network for reflectance decomposition and material discretization. The continuous branch (green part) predicts the decomposed BRDF attributes, including diffuse, specular, and roughness, while the discrete branch (red part) uses the VQ mechanism to discretize reflectance factors. After optimization, a material map is generated, which enables us to easily select specific materials for editing.
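To make the discretization step concrete, here is a minimal sketch of generic vector quantization: each continuous per-pixel feature is snapped to its nearest codeword, the codeword indices form a material map, and clicking a pixel selects every pixel with the same index. This is not the authors' implementation. The function names, the 3-dimensional toy features, and the fixed two-entry codebook are all assumptions for illustration, and the training side (codebook learning, gradient handling, codeword ranking) is omitted.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equally sized vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(
    features: List[Vector], codebook: List[Vector]
) -> Tuple[List[int], List[Vector]]:
    """Map each continuous feature to its nearest codeword.

    Returns the codeword indices (material IDs) and the quantized features.
    """
    indices = []
    for feature in features:
        distances = [squared_distance(feature, code) for code in codebook]
        indices.append(distances.index(min(distances)))
    quantized = [codebook[i] for i in indices]
    return indices, quantized


def select_material(
    material_map: List[List[int]], row: int, col: int
) -> List[List[bool]]:
    """Mask of all pixels that share the material ID of the clicked pixel."""
    target = material_map[row][col]
    return [[material_id == target for material_id in line] for line in material_map]


# Hypothetical toy data: a 2x3 image with one 3-d reflectance feature per pixel
# and a codebook of two materials (a reddish and a bluish one).
codebook = [(0.8, 0.1, 0.1), (0.1, 0.1, 0.8)]
pixels = [
    (0.75, 0.15, 0.12), (0.82, 0.08, 0.10), (0.12, 0.09, 0.77),
    (0.79, 0.11, 0.09), (0.10, 0.14, 0.83), (0.09, 0.10, 0.80),
]

indices, quantized = quantize(pixels, codebook)
material_map = [indices[0:3], indices[3:6]]  # reshape the flat list to 2 rows x 3 columns
mask = select_material(material_map, 0, 0)   # simulate a click on the top-left pixel
```

In this toy setup the noisy per-pixel features collapse onto two codewords, so an edit applied to one codeword would affect every pixel in the corresponding mask at once.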

In comparison with: NVDIFFRECMC [1], NVDIFFREC [2], and NeRFactor [3]

[1] Shape, Light, and Material Decomposition from Images using Monte Carlo Rendering and Denoising. NeurIPS 2022.

[2] Extracting Triangular 3D Models, Materials, and Lighting From Images. CVPR 2022.

[3] NeRFactor: Neural Factorization of Shape and Reflectance Under an Unknown Illumination. TOG 2021.